life is too short for a diary

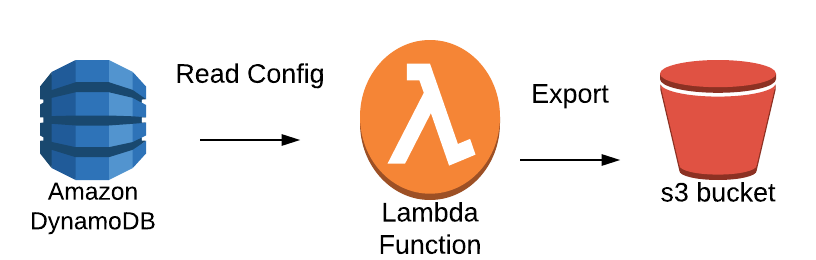

Export DynamoDB Table to S3 Bucket Using Lambda Function

Tags: aws lambda function dynamodb s3

DynamoDB is a great NoSQL service from AWS, and we often need to export the data stored in a DynamoDB table.

First, let's review our use case: a Lambda function reads all items from a DynamoDB table and exports them as JSON to an S3 bucket.


Using boto3 resource

We can create a test event payload for our Lambda function:

    {
      "TableName": "DynamoDB_Table_name",
      "s3_bucket": "s3_bucket_name",
      "s3_object": "s3_object_name",
      "filename": "output.json"
    }
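A minimal sketch of the reading step, assuming the handler builds the table with boto3.resource("dynamodb").Table(event["TableName"]) and passes it in. A single Scan call returns at most 1 MB of data, so the helper keeps calling scan with ExclusiveStartKey until the response no longer contains LastEvaluatedKey. The name scan_all_items is hypothetical, not part of boto3.

```python
def scan_all_items(table):
    """Read every item from a boto3 DynamoDB Table resource.

    Scan returns at most 1 MB per call, so keep paging with
    ExclusiveStartKey until no LastEvaluatedKey comes back.
    """
    items = []
    scan_kwargs = {}
    while True:
        response = table.scan(**scan_kwargs)
        items.extend(response.get("Items", []))
        last_key = response.get("LastEvaluatedKey")
        if not last_key:
            return items
        # Resume the next scan right after the last key we received.
        scan_kwargs["ExclusiveStartKey"] = last_key


# Hypothetical usage inside the handler (boto3 ships with the Lambda Python runtime):
# table = boto3.resource("dynamodb").Table(event["TableName"])
# items = scan_all_items(table)
```

Paging manually keeps the helper working with the Table resource, which (unlike the client) does not offer a built-in scan paginator.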

However, we may run into an error when serializing some values. The boto3 resource returns DynamoDB numbers as Python decimal.Decimal objects, which json.dumps cannot handle:

TypeError: Object of type Decimal is not JSON serializable

To fix this, we can update our Lambda function to serialize items with a custom json.JSONEncoder subclass that converts Decimal values into regular numbers.
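A minimal sketch of such an encoder, together with a hypothetical write_json_to_s3 helper that uploads the result using the S3 client's put_object call. Whole-number Decimals become int and the rest become float (which can lose precision for very long decimals); string and number sets, which the resource returns as Python sets, become lists. How s3_object and filename combine into the object key is our assumption: here s3_object is treated as a key prefix.

```python
import json
from decimal import Decimal


class DecimalEncoder(json.JSONEncoder):
    """JSON encoder for items returned by the boto3 DynamoDB resource."""

    def default(self, obj):
        if isinstance(obj, Decimal):
            # Whole numbers become int; others become float (may lose precision).
            return int(obj) if obj == obj.to_integral_value() else float(obj)
        if isinstance(obj, set):
            # SS/NS attributes come back as Python sets; JSON needs a list.
            return list(obj)
        return super().default(obj)


def write_json_to_s3(s3_client, event, items):
    """Upload items as JSON; s3_client is e.g. boto3.client("s3").

    Assumption: "s3_object" is a key prefix (folder) and "filename"
    is the object name, giving keys like "exports/output.json".
    """
    key = f'{event["s3_object"].rstrip("/")}/{event["filename"]}'
    body = json.dumps(items, cls=DecimalEncoder)
    s3_client.put_object(
        Bucket=event["s3_bucket"],
        Key=key,
        Body=body.encode("utf-8"),
        ContentType="application/json",
    )
    return key
```

Passing cls=DecimalEncoder only changes how unknown types are handled, so strings, booleans and nested maps are serialized exactly as before.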

Using boto3 client

Another way to export the data is to use the boto3 client, which is a low-level interface to AWS services.
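A minimal sketch of the reading step with the low-level client, assuming client = boto3.client("dynamodb"). Clients expose built-in paginators, so get_paginator("scan") follows LastEvaluatedKey for us. The function name scan_raw_items is hypothetical.

```python
def scan_raw_items(client, table_name):
    """Return all items of a table using a low-level boto3 DynamoDB client.

    Items keep their type descriptors, e.g. {"id": {"S": "a1"}, "qty": {"N": "3"}}.
    """
    paginator = client.get_paginator("scan")
    items = []
    for page in paginator.paginate(TableName=table_name):
        items.extend(page.get("Items", []))
    return items


# Hypothetical usage (requires boto3):
# client = boto3.client("dynamodb")
# raw_items = scan_raw_items(client, event["TableName"])
```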

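However, the boto3 client returns items in DynamoDB JSON, where every attribute value is wrapped in a type descriptor such as {"S": "text"} or {"N": "42"}. A short Python script using the dynamodb_json library can convert it back to normal JSON.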
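To show what that conversion does, here is a small hand-written converter that we swap in for the dynamodb_json library so the example has no dependencies. In practice, dynamodb_json or boto3's boto3.dynamodb.types.TypeDeserializer does the same job more completely. This sketch covers the common descriptors (S, N, BOOL, NULL, M, L, SS, NS) and rejects anything else, such as the binary types B and BS; the function names are hypothetical.

```python
from decimal import Decimal


def from_dynamodb_value(value):
    """Convert one DynamoDB JSON value such as {"N": "3"} to a plain Python value."""
    type_tag, payload = next(iter(value.items()))
    if type_tag in ("S", "BOOL"):
        return payload
    if type_tag == "NULL":
        return None
    if type_tag == "N":
        number = Decimal(payload)
        # Whole numbers become int; others become float (may lose precision).
        return int(number) if number == number.to_integral_value() else float(number)
    if type_tag == "M":
        return {key: from_dynamodb_value(val) for key, val in payload.items()}
    if type_tag == "L":
        return [from_dynamodb_value(val) for val in payload]
    if type_tag == "SS":
        return list(payload)
    if type_tag == "NS":
        return [from_dynamodb_value({"N": n}) for n in payload]
    raise ValueError(f"unsupported DynamoDB type descriptor: {type_tag}")


def from_dynamodb_item(item):
    """Convert a whole DynamoDB JSON item to a normal dict."""
    return {key: from_dynamodb_value(val) for key, val in item.items()}
```

For example, {"id": {"S": "a1"}, "qty": {"N": "3"}} becomes {"id": "a1", "qty": 3}, which json.dumps can serialize directly.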
